Video Games and Online Chats Are ‘Hunting Grounds’ for Sexual Predators

Criminals are making virtual connections with children through gaming and social media platforms. One popular site warns visitors, “Please be careful.”

By NELLIE BOWLES and MICHAEL H. KELLER

DEC. 7, 2019

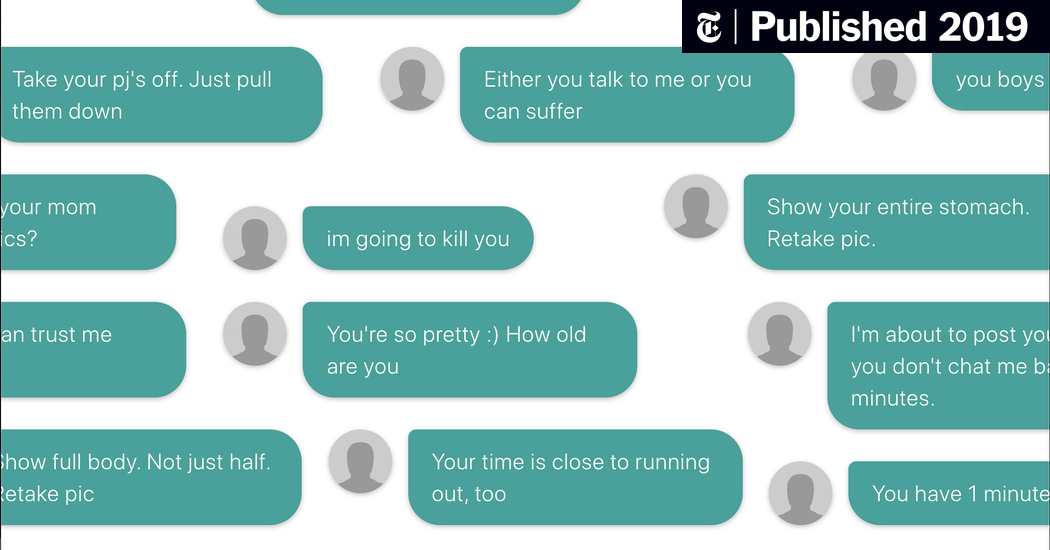

You have 5 seconds to take each pic

Take your pj's off. Just pull them down

Show your entire stomach. Retake pic.

I'm about to post your pics If you don't chat me back in 10 minutes.

Either you talk to me or you can suffer

You're so pretty

How old are you

Your time is close to running out, too

DO you want your mom seeing your pics?

Show full body. Not just half. Retake pic

You have 1 minute. Hurry up

I mean you can trust me right

Show us that you’re crying

Conversations excerpted from court documents

When Kate’s 13-year-old son took up Minecraft and Fortnite, she did not worry.

The video games were hardly Grand Theft Auto — banned in their home because it was too violent — and he played in a room where she could keep an eye on him.

But about six weeks later, Kate saw something appalling pop up on the screen: a video of bestiality involving a young boy. Horrified, she scrolled through her son’s account on Discord, a platform where gamers can chat while playing. The conversations were filled with graphic language and imagery of sexual acts posted by others, she said.

Her son broke into tears when she questioned him last month.

“I think it’s a huge weight off them for somebody to step in and say, ‘Actually this is child abuse, and you’re being abused and you’re a victim here,’” said Kate, who asked not to be identified by her full name to protect her family’s privacy.

Exploited

Articles in this series examine the explosion in online photos and videos of children being sexually abused. They include graphic descriptions of some instances of the abuse.

Sexual predators and other bad actors have found an easy access point into the lives of young people: They are meeting them online through multiplayer video games and chat apps, making virtual connections right in their victims’ homes.

The criminals strike up a conversation and gradually build trust. Often they pose as children, confiding in their victims with false stories of hardship or self-loathing. Their goal, typically, is to dupe children into sharing sexually explicit photos and videos of themselves, which they use as blackmail for more imagery, much of it increasingly graphic and violent.

Reports of abuse are emerging with unprecedented frequency around the country, with some perpetrators grooming hundreds and even thousands of victims, according to a review of prosecutions, court records, law enforcement reports and academic studies. Games are a common target, but predators are also finding many victims on social platforms like Instagram and Kik Messenger.

A gamer at DreamHack, a festival held last month in Atlanta. Kholood Eid for The New York Times

The New York Times reported earlier this year that the tech industry had made only tepid efforts to combat an explosion of child sexual abuse imagery on the internet. The Times has also found that the troubled response extends to the online gaming and chat worlds, where popular and successful companies have created spaces that allow adults and children to interact, despite efforts to create some safeguards.

There are tools to detect previously identified abuse content, but scanning for new images, like those extorted in real time from young gamers, is more difficult. While a handful of products have detection systems in place, there is little incentive under the law to tackle the problem as companies are largely not held responsible for illegal content posted on their websites.

“Our society says we’re going to protect kids in the physical world, but we’ve yet to see that in the same way on the digital side,” said Steven J. Grocki, who leads the child exploitation and obscenity section at the Justice Department.

A spokesman for Discord said in a statement that the company had a “zero-tolerance policy for any illegal activity.”

“No parent should have to worry that their child is exposed to inappropriate content,” he added, “and we deeply empathize with the challenges that families face in protecting their children online.”

Six years ago, a little over 50 reports of the crimes, commonly known as “sextortion,” were referred to the federally designated clearinghouse in suburban Washington that tracks online child sexual abuse. Last year, the center received over 1,500. And the authorities believe that the vast majority of sextortion cases are never reported.

There has been some success in catching perpetrators. In May, a California man was sentenced to 14 years in prison for coercing an 11-year-old girl “into producing child pornography” after meeting her through the online game Clash of Clans. A man in suburban Seattle got a 15-year sentence in 2015 for soliciting explicit imagery from three boys after posing as a teenager while playing Minecraft and League of Legends. An Illinois man received a 15-year sentence in 2017 after threatening to rape two boys in Massachusetts whom he had met over Xbox Live, adding that he would kill one of them.

“The first threat is, ‘If you don’t do it, I’m going to post on social media, and by the way, I’ve got a list of your family members and I’m going to send it all to them,’” said Matt Wright, a special agent with the Department of Homeland Security. “If they don’t send another picture, they’ll say: ‘Here’s your address — I know where you live. I’m going to come kill your family.’”

The trauma can be overwhelming for the young victims. An F.B.I. study reviewing a sample of sextortion cases found that more than a quarter of them led to suicide or attempted suicide. In 2016, a Justice Department report identified sextortion as “by far the most significantly growing threat to children.”

It makes sense that the gaming world is where many predators would go: It’s where the children are. Almost every single teenage boy in America, 97 percent, plays video games, while about 83 percent of girls do, according to the Pew Research Center.

In many states, gaming counts as a team sport and can earn players a varsity letter. Colleges offer scholarships to elite gamers, and cities are racing to establish professional teams. The industry is enormously profitable, generating over $43 billion in revenue last year in the United States.

There are many ways for gamers to meet online. They can use built-in chat features on consoles like Xbox and services like Steam, or connect on sites like Discord and Twitch. The games have become extremely social, and developing relationships with strangers on them is normal.

In many instances, the abusive relationships start in the games themselves. In other cases, adults posing as teenagers move conversations from gaming sites and chat rooms to platforms like Facebook Messenger, Kik and Skype, where they can communicate more privately.

To report online child sexual abuse or find resources for those in need of help, contact the National Center for Missing and Exploited Children at 1-800-843-5678.

“These virtual spaces are essentially hunting grounds,” said Mary Anne Franks, a professor at the University of Miami School of Law and president of the Cyber Civil Rights Initiative, a nonprofit group dedicated to combating online abuse.

One platform frequently used by predators is the video chat site Omegle — users need look no further than the site’s home page to find that out. “Predators have been known to use Omegle, so please be careful,” the site advises under a banner that exclaims, “Talk to strangers!” Omegle did not respond to requests for comment.

This fall, the F.B.I. rolled out an awareness campaign in middle and high schools to encourage children to seek help when caught in an exploitive sexual situation. “Even if you accepted money or a game credit or something else, you are not the one who is in trouble,” material from the campaign explains.

All of it worries Bubba Gaeddert, the executive director of the Varsity Esports Foundation, which gives high schools financial assistance to promote “healthy gaming habits” and develop students’ science and technology skills.

Every time there’s a new report of predatory behavior, he said, people ask, “Who’s to blame — schools, society, parents?”

“Well, it’s all of us.”

Playing With Strangers

It was a gamer’s paradise. Last month, 35,000 people had registered for a video game conference in Atlanta called DreamHack, a weekend-long festival for fans to meet and play and for companies to showcase new offerings.

There were exhilarated gamers everywhere, some passed out on sofas from exhaustion.

Ben Halpert was not one of them. As he surveyed the exhibition space, he saw potential peril around every corner. Mr. Halpert runs Savvy Cyber Kids, a nonprofit focused on online safety, a subject he was there to talk about.

You’ve been tricked. I’m a guy. And I will email all the pics u sent to your school I would not block me or delete kik, because if you do that, I'm still sending it to your school. If you obey me and do everything I want, then I will delete your pics and I will not email your school. But I warn you, if you disobey me once, I will send your pics

Okay what do I have to do?

It’ll involve nudes

Take ur bra off and show your boobs

I’m crying

Please is there anything else I can do?

No. I will let you know when we are done.

If I don’t send you nudes, what is my other option?

There is literally no other option.

Please give me another option.

Source: United States District Court for the Middle District of Louisiana.

Note: This conversation has been edited for clarity.

“Tech has made it easier for predators to get our kids faster and more efficiently,” he said, adding that it made children vulnerable by “normalizing communication with strangers.”

Today, even games meant for small children, like those on Roblox, allow players to chat with others. His own daughter was just 6, Mr. Halpert said, when another player in a children’s animal game asked who she was.

“I didn’t even know they could chat,” he said. “It had nothing to do with gameplay, and none of the other animals were talking to each other. That was someone reaching out to my daughter.”

After making contact, predators often build on the relationship by sending gifts or gaming currency, such as V-Bucks in Fortnite. Then they begin desensitizing children to sexual terms and imagery before asking them to send naked pictures and videos of their own.

“Parents aren’t telling their kids at 6 years old, ‘Keep your clothes on online,’” Mr. Halpert said. “But they need to.”

[Read experts’ advice to parents on internet safety.]

Mr. Halpert had arranged to hold a panel on sextortion at DreamHack, but it proved difficult to find experts to join the discussion, he said. Some prominent cosplayers, a game community manager and a former e-sports professional sat onstage with him, but they focused on other problems like cyberbullying.

Ben Halpert, head of Savvy Cyber Kids, an online safety nonprofit. The audience for his DreamHack panel on sextortion. A recording of the panel, which he found difficult to organize because of the topic.

Kholood Eid for The New York Times

When he brought up grooming, the panelists fell silent or changed the subject.

The audience, too, was quiet. In a festival with tens of thousands of attendees, Mr. Halpert’s talk had attracted just a half-dozen.

“People don’t want to talk about it,” he said.

Catching the Predators

New Jersey police departments were flooded with phone calls from parents and teachers, alarmed about pedophiles lurking on game sites and in chat rooms.

And so law enforcement officials from across the state took over a building near the Jersey Shore last year, and started chatting under assumed identities as children.

In less than a week, they arrested 24 people.

Nearly “every game has a chat, so it’s hard for parents to keep track, even if they’re doing their homework,” said Lilianne Daniel, a deputy attorney general in New Jersey and one of the operation’s leaders. “So we realized there were these unregulated conversations happening.”

“We needed to take the next step,” said Christine Hoffman, an assistant attorney general who helped lead the sting.

The authorities did it again, this time in Bergen County, a suburb close to New York City. They made 17 arrests. And they did it once more, in Somerset County, toward the center of the state, arresting 19. One defendant was sentenced to prison, while the other cases are still being prosecuted.

After the sting, the officials hoped to uncover a pattern that could help in future investigations. But they found none — those arrested came from all walks of life. Among them were a police officer, a teacher, a minister, a nurse, a bank manager, a mechanic, a waiter, a dental hygienist, a college student and a deliveryman.

“It cuts across all social and racial lines, across class lines — it cuts across every line,” Ms. Hoffman said. “There is no profile.”

When announcing the arrests, the authorities highlighted Fortnite, Minecraft and Roblox as platforms where suspects began conversations before moving to chat apps. Nearly all those arrested had made arrangements to meet in person.

In a separate case in Ohio, the digital abuse of a young boy led to his physical abuse. The offender, Jason Gmoser, would encourage boys to show their genitals while on PlayStation, according to court records. Mr. Gmoser, who was found with over 500 videos recorded while gaming with boys, often offered gift cards that could be used on the network.

He told detectives in 2014 that he spent years interacting with an 8-year-old who had appeared in several of the videos, including one in which the boy exposed himself and said he would “do anything” for a $20 gift card.

Mr. Gmoser traveled to Missouri to visit the boy and his family, showering them with gifts and paying some of their bills. On at least one trip, he said, he sexually abused the child. He is now serving a life sentence in a separate case, for running a child sexual abuse site on the dark web.

Lilianne Daniel, a deputy attorney general in New Jersey, and Christine Hoffman, an assistant attorney general, who helped lead a raid on online predators. Lt. John Pizzuro, who took part in the operation, with the game Minecraft.

Kholood Eid for The New York Times

Any young person who converses online with strangers is at risk.

In 2018, three men from across the country were convicted of running a sextortion ring for years that lured hundreds of children, some as young as 8, from social and video-streaming platforms that included LiveMe; Omegle; Musical.ly, the predecessor of TikTok; Skype; Snapchat; and Twitter’s Periscope. The men pretended to be teenage boys and girls and coerced children into undressing and performing explicit acts. They shared files and information about the victims over Discord.

In another case, a girl attending high school in Tennessee thought she had made a new female friend on Kik Messenger. They both loved volleyball, and they even resembled each other physically, their profile photos showed. They chatted for six months.

After the teenager shared a partially nude photo of herself, the “friend” became threatening and demanded that she record herself performing explicit acts. “You literally have no choice but to obey unless u want ur pics spread to your friends,” the person wrote, according to court records.

The girl told her mother, who called the police. The offender was a Louisiana man, Matthew Chaney Walker, who was 24 years old at the time of the chat in 2014. The police said Mr. Walker, now in prison, had forced more than 50 girls to send him nude and sexually explicit photos.

The photos of the Tennessee teenager were never shared publicly, but she said in an interview with The Times that she was haunted by the experience years later.

“I thought for a long time that there was something wrong with me or that I was a bad person,” she said. “Now that I’ve gotten to college, I’ll talk to my friends about it, and there have been so many girls who have said, ‘That exact same thing happened to me.’”

An Industry Without Answers

There are a few seemingly simple protections against online predators, but logistics, gaming culture and financial concerns present obstacles.

Companies could require identification and parental approvals to ensure games are played by people of the same age. But even as some platforms have experimented with programs like Real ID, a verification effort, gamers have resisted giving up anonymity.

“There’s been community-layer rejection of those systems because people like to be able to be anybody,” said Todd Harris, who co-founded Hi-Rez, a game development studio based in Atlanta.

While Facebook has algorithms that can detect some text-based grooming, many gamers use audio and video chat. And eliminating audio and video interactions would be a death sentence for a gaming company fighting for customers. “You can’t seriously compete without talking,” Mr. Harris said. “The team with the best communication will win.”

In 2016, an industry coalition was formed to create a common front on online bullying, hate speech and child exploitation, but officials with the group said they would not publish a series of guidelines for companies until next year.

Separately, some gaming companies deploy automated systems they say can detect some grooming behaviors, including attempts to move a chat off platform. Roblox, for instance, uses software from Two Hat Security, a Canadian firm, designed to block explicit language and contact information.

The company takes other measures, a Roblox spokesman said, prohibiting users from asking about names and ages. For children under 13, the spokesman said, filters are more aggressive.

Microsoft, which owns Xbox and the popular game Minecraft, said it planned to release software early next year that could recognize some forms of grooming and sextortion. The company said it would offer the software to other tech businesses free of charge.

Marc-Antoine Durand, the chief operating officer of Yubo, a video chat app based in France that is popular among teenagers, said it started working this year with a company called Yoti to analyze users’ selfies and estimate their ages. Yubo also examines profile information to separate adults from minors, Mr. Durand said, and watches for grooming behavior with the Two Hat software.

Yubo disables accounts when it finds an age discrepancy, often requiring users to provide a government-issued ID. But users frequently object to providing documentation, and many children do not possess it. In February, a 26-year-old Ohio man was charged with sexual exploitation after claiming to be 13 on Yubo and luring a 12-year-old girl, the authorities said.

A Facebook spokeswoman said it had made a variety of efforts to separate adults from children, including limiting how adults can message and connect with them. The Times was able to find minors by looking at users’ lists of friends and activities on pages popular with children.

I’m about to post your pics If you don’t chat me back in 10 minutes

Sorry. I haven’t been on since thanksgiving morning. Ive had a lot of stuff going on with my mom in the Hospital.

I’m with my little sister my aunt , and my granda . I can’t talk right now.

Yes you can do u want me to post your pics everywhere?

I only want 10 more pics and I will leave you alone forever If not I will show everyone

Okay I swear I will get you 10 pics by tonight. Can you tell me what 10 pics you want,.? I swear I will get them to you tonight.

Ok how old is your sis baby?

Send some pics of her instead

Wait. What kind of pics????

My little sister is 7 years old. I’m not going to take naked pictures of her.

Ok well I will post your pics then I want a few of her and you ok

Please Thats my little sister. I can’t do that to her. I beg you. Please

Source: United States District Court for the Middle District of Florida.

Note: This conversation has been edited for clarity.

Instagram, owned by Facebook, does not have the same restrictions. Until this past week, it had allowed users to send private messages to anyone, and a Times reporter was able to contact and video-chat with a 13-year-old girl who had a private account (the girl and her parents gave permission to conduct the test). After The Times asked about the policy, Instagram announced new features on Wednesday that allow users to block messages from people they do not follow. The company said it would also require users to enter their age when signing up.

Other companies have taken a more hands-off approach, citing privacy concerns.

A member of the trust and safety team at Discord said that it scanned shared images for known illegal material, and that moderators reviewed chats considered a high risk. The company does not, however, automatically monitor conversations for grooming, suggesting it would be unreliable and a privacy violation.

“We use industry-leading technology such as photo-detection software to scan every image and video for child pornography,” a Discord spokesman said.

Some of the biggest gaming companies provided few, if any, details about their practices. Epic Games, the creator of Fortnite, which has roughly 250 million users, did not respond to multiple messages seeking comment.

Sony, the maker of PlayStation, which had nearly 100 million monthly active users earlier this year, said it took sextortion seriously, pointing to its tutorials on parental controls and tools that let users report abusive behavior.

But the solution many game developers and online safety experts return to is that parents need to know what their children are playing, and that children need to know what tools are available to them. Sometimes that means blocking users and shutting off chat functions, and sometimes it means monitoring the games as they are being played.

“‘Literacy’ is the word I say a billion times a day,” said Mr. Gaeddert, of the e-sports foundation.

‘A Friend of Somebody’

When Kate started scrolling through her son’s Discord account, she saw how the sexualized chats had unfolded. The imagery became increasingly disturbing, moving from innocuous anime figures to pornographic illustrations — and finally to actual children being abused.

So Kate started asking her son about some of the user names of his fellow gamers. “And he’s saying, ‘That’s so-and-so who goes to this school.’ And they all think it’s a friend of somebody,” she said, “but then they realize it’s not a friend of anybody.”

Kate reached out to Discord to alert them to the problem. The company wrote back saying that because her son had deleted the messages, it no longer had the data and could take no action.

“First I was very sad, but I’m really angry,” she said.

At DreamHack, the festival in Atlanta, many parents accompanying their children described how confusing it was to keep track of the various chats and streams their young gamers use.

“We’re not gamers, so we’re relying on our son to tell us about how it all works, which is probably naïve,” said John Marshall of Tuscaloosa, Ala., whose 17-year-old son was competing at the festival with his high-school gaming team.

Parents who attended DreamHack said they were confounded by the volume of new apps to which their children had access.

Kholood Eid for The New York Times

His wife, Rhonda, compared the predicament to watching a child get behind the wheel of a car: “You have to trust them.”

Ms. Marshall said it was also hard to ignore the potential upside of their son’s gaming. “He kept telling me, ‘Mom, you told me I shouldn’t spend so much time on computers, but I can get scholarships for this,’” she said.

Still, there’s no hiding the dangers. Kristy Custer, the principal at Complete High School Maize in Kansas, helped design the curriculum used by many high-school e-sports teams.

“Right now, in the curriculum, we have a section on, ‘If this happens to you, this is what you do,’” she said. “But we probably need to say, ‘When this happens to you, this is what you need to do.’”

Dr. Custer said parents should react carefully when their children report encounters with online predators. Punishing the children — no more video games or social media, for example — could backfire by pushing them into even more dangerous places for their online activity.

“You just did exactly what that predator wanted them to do — and drove them into the darker space,” she said.

Gabriel J.X. Dance contributed reporting.

Produced by Aliza Aufrichtig, Rich Harris, Virginia Lozano and

